OZU

Search and discovery engine for film and TV. We're building narrative intelligence to understand stories at scale. Our AI understands elements of craft, plot, and emotional subtext, enabling new ways of interacting with content.

CVEU Industry Spotlight

Presented CinemaNet, a jointly trained multi-task classification model that codifies a comprehensive taxonomy of cinematography, at the inaugural Creative Video Editing and Understanding workshop.

HBO Max - "The Orbit"

Flagship interactive retail experience for HBO Max & AT&T, in collaboration with HUSH, where users could interact with parts of the HBO archive in real time using gestures and words.

Infinite Bad Guy

Collaborative project with IYOIYO studio / Google & YouTube. I built custom vision models that helped power the visual intelligence behind the experience.

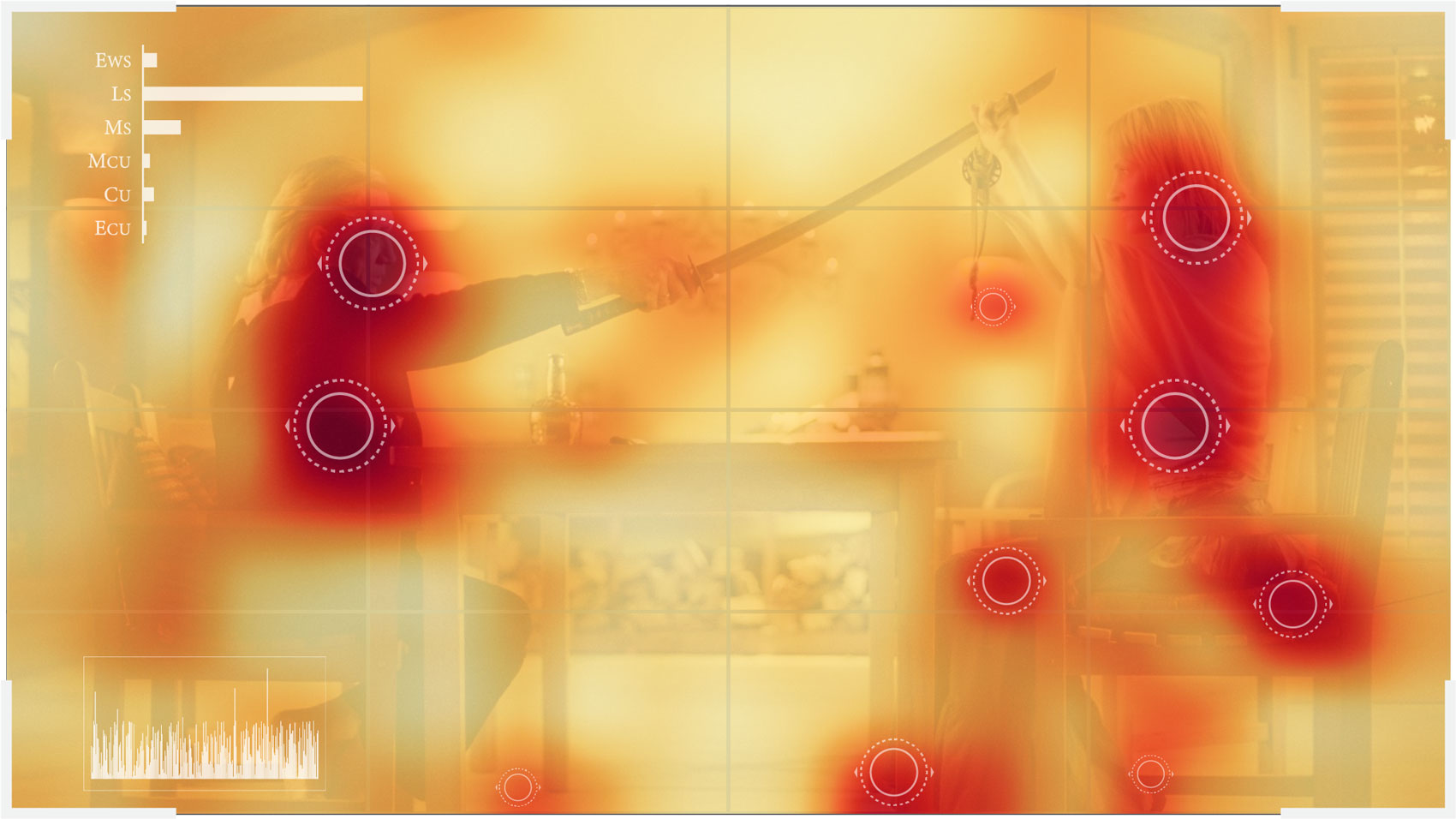

A.I. for Filmmaking - Recognising Shot Types

Blog post introducing the basics of visual language in cinema, and a custom model and dataset built to recognise different types of framing.